Conference Report: Vault Linux Storage and Filesystems Conference 2016

Two weeks ago, I attended and spoke at the Vault Linux Storage and Filesystems Conference in the Raleigh Marriott City Center in Raleigh, North Carolina.

Organized by the Linux Foundation, the event was co-located with the Linux Storage, Filesystem and Memory Management Summit that took place in the days before. LSF/MM is an invite-only gathering of key Linux kernel developers, who discuss kernel development topics in person instead of on a mailing list.

Located in downtown Raleigh, the venue was easy to reach and provided enough conference rooms with sufficient space. Each day started with a good breakfast, and catering was also available during the breaks. A "booth crawl" social event on Wednesday evening provided ample opportunity to network and chat with the other attendees and to take a look at the exhibitors' offerings.

In my estimation, there were around 200-300 attendees and speakers at Vault, mostly from the companies that sponsored the event or had employees giving talks. From what I could tell, only a small fraction of the attendees were not actively involved in the Vault conference (or LSF/MM) in some form or capacity.

It was also my impression that Red Hat noticeably dominated the event by sending lots of employees and developers. This is probably no surprise, considering that their corporate headquarters are located two blocks from the venue and that they employ many kernel developers. SUSE was also present with an exhibition booth and many engineers. Interestingly, I did not notice anyone from Canonical/Ubuntu.

Many of the presentations were given by the developers themselves, so these sessions were quite technical with a lot of depth, but also of excellent quality. If you wanted to get updates on what's happening in the various Linux storage subsystems and related technologies, Vault is an ideal venue for that.

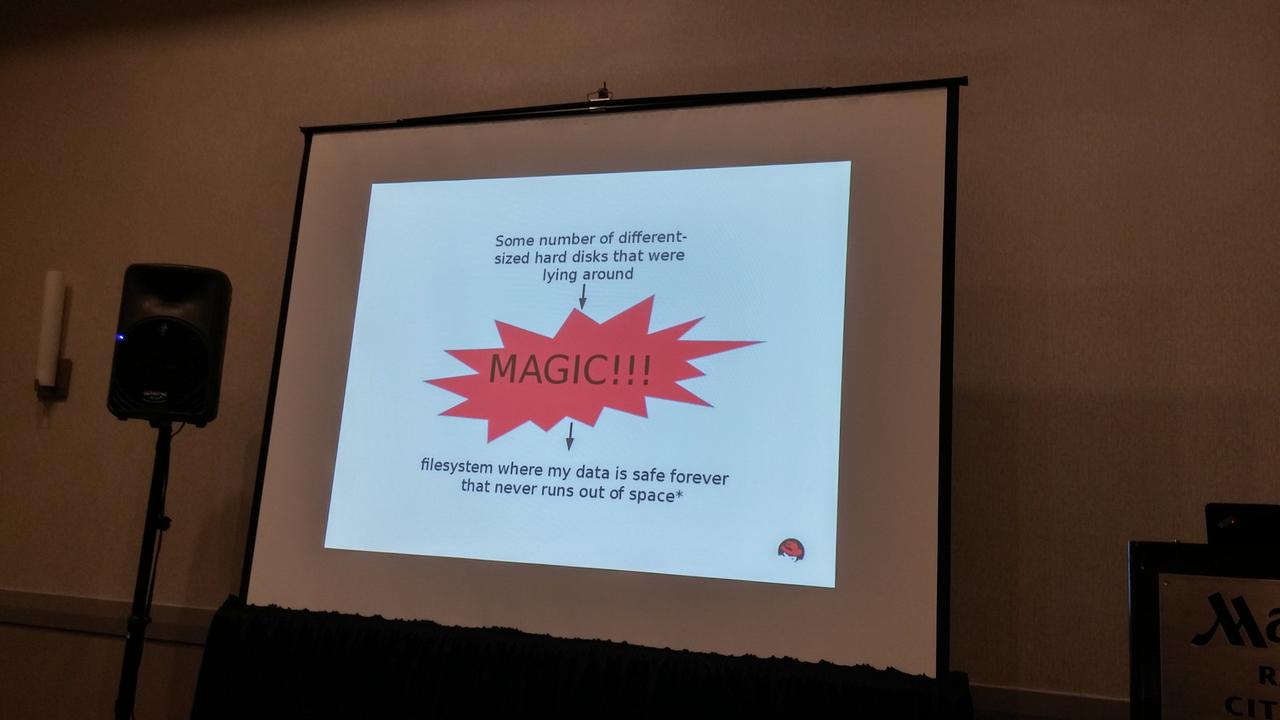

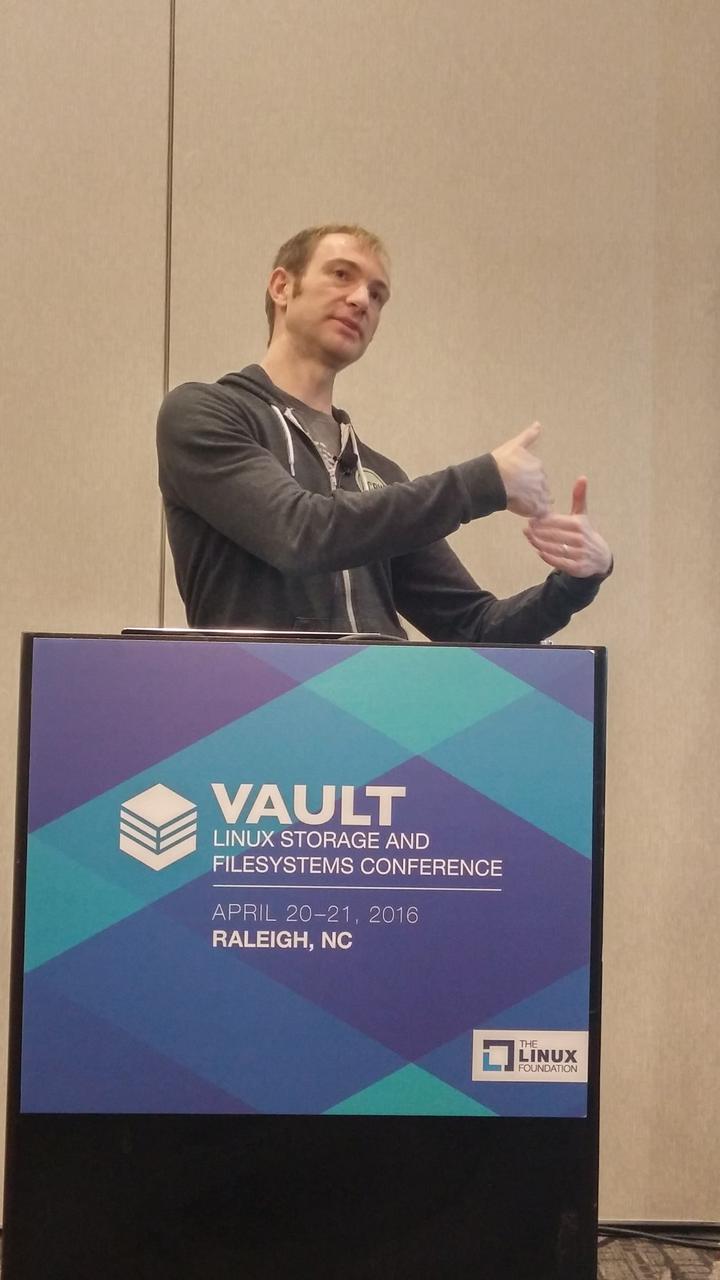

I took some pictures during the conference, here's a small selection:

You can find some more in my Flickr Set and in the Linux Foundation's official Flickr Set.

Both days started with keynote presentations in the morning. My personal highlights on Tuesday were Sage Weil's Going Big with Open Source and Information Storage in DNA by Nick Goldman, which provided a refreshing and entertaining insight into the bioscience of using DNA for storing digital data like a JPEG image or a PDF file. Granted, the technology is not ready for prime time yet, as encoding and decoding take quite some time, but the potential information density and the durability of storing information this way sounded promising.

On Wednesday, James Bottomley (IBM), Mel Gorman (SUSE) and Jeff Layton (Primary Data) summarized the outcome of the LSF/MM Summit in a session moderated by Martin K. Petersen (Oracle), which was quite insightful. The developers covered a wide range of topics, from supporting new hardware like non-volatile memory devices to improvements to the I/O scheduler.

The keynotes were followed by a packed schedule, with 5-6 sessions taking place in parallel. This sometimes made it hard for me to choose, especially with the lack of video streaming or recording of sessions that would have allowed me to review missed sessions afterwards. In my opinion, the lack of video recordings is something that the Linux Foundation should fix for their upcoming conferences.

So I tried to attend as many sessions as I could, to brush up on various technologies and projects and to gain more insight into storage topics that are relevant for our openATTIC project.

In addition to that, I also gave a presentation with Lars Marowsky-Brée from SUSE about openATTIC as a Ceph Management System, in which we outlined the current status of the Ceph management functionality in openATTIC and how we intend to proceed with the development. While our audience could have been bigger, the feedback on our talk was quite positive.

Of the other sessions I attended, my personal highlights include:

What's new in RADOS for Jewel? by Samuel Just (Red Hat). The Ceph Jewel release was published this week and brings a number of performance improvements and new functionality. The declaration of CephFS as stable was especially well received. I look forward to testing the Jewel release with openATTIC.

Froyo: Hassle-free Personal Array Management using XFS and DM by Andy Grover (Red Hat) provided an interesting approach to building a Drobo-like device based on Linux and Open Source technologies like Device Mapper and the XFS file system. The intention is to make it particularly easy for end users to manage large amounts of storage, by letting them easily swap disk drives to increase storage capacity or replace faulty devices. It is written in the Rust language, which might be somewhat of a barrier for gathering a developer community around it.

In A Small Case Study: Lessons Learned at Facebook, Chris Mason provided some interesting insight into the Linux kernel work that is performed at Facebook. They have a dedicated kernel development and maintenance team that works closely with the upstream kernel development community. They were recently able to significantly reduce the number of patches they apply against the mainline Linux kernel: they are now down to a single patch that fit on one slide of his presentation. They also observed that updates to newer kernel versions brought significant performance improvements. And even though Chris explicitly did not want to talk about the Btrfs file system, it seems to be serving Facebook quite well - they just recently assigned an additional engineer to work on it.

CephFS as a Service with OpenStack Manila by John Spray (Red Hat) gave an update on what's new for OpenStack in the latest Ceph "Jewel" release, with a focus on the CephFS file system and the related driver for the OpenStack "Manila" project, which aims at providing shared file access (e.g. NFS) to VMs. This poses some interesting challenges when it comes to deploying the service and configuring access control, as the current implementation in Manila is quite NFS-centric and makes certain assumptions that do not apply to CephFS, e.g. doing access control based on IP addresses or the very definition of a "share". John also discussed an alternative approach, by which the CephFS file system is mounted on the KVM hypervisor and the VMs use a virtual mount (VSOCK) to access the files. However, this requires work on the kernel, so it may take some time until it's generally available and supported by all distributions.

In BlueStore: A New, Faster Storage Backend for Ceph, Sage Weil (Red Hat) explained the ongoing development work on an alternative storage backend for the Ceph OSD processes, as the existing filesystem-based implementation suffers from several performance problems and limitations due to the required POSIX semantics. This new storage backend will work on raw block devices directly and is based on the RocksDB embedded key-value database. I found the history of the various storage backends in Ceph quite insightful; it included several failed attempts like the "NewStore" backend, which was a hybrid implementation using both a file system and the RocksDB database. A first experimental version of BlueStore is now available in Ceph "Jewel" - however, the implementation is still under development and the on-disk format is likely to change. The Ceph developers are keen on feedback from early adopters, but one should not yet trust valuable data to it.

In Building a File Sync and Share Mesh Network with Federation, Frank Karlitschek, founder of the ownCloud project, talked about his vision of how individual users should be able to securely share information with others, without having to depend on proprietary services. Using ownCloud as an example, he discussed some of the challenges and requirements for setting up a global federated mesh network of independent servers. In his mind, there should be an open protocol for sharing files that works similarly to an email service. To foster the development of such an architecture, ownCloud teamed up with Pydio to join the OpenCloudMesh initiative, to support sharing files between ownCloud and Pydio implementations. This was likely Frank's last public appearance as a representative of ownCloud Inc. - he announced his departure just a few days after the conference.

The panel discussion Large-scale Enterprise Automation of Open Source File Systems at Clemson University gave an interesting insight into how the university uses off-the-shelf Supermicro servers running OpenZFS on Linux to manage several petabytes of storage with a custom utility named zettaknight that they created. It was interesting to learn about such a large-scale production deployment of OpenZFS on Linux and how they cope with the proliferation of ZFS file systems, snapshots and replication sites. As of today, they have never lost any data due to bugs in ZFS itself, which was quite reassuring.

In summary, Vault was a very good conference to attend. I regret that there were no recordings of the sessions, as I sometimes had to decide between multiple interesting talks in the same slot. I enjoyed learning a lot about ongoing developments and technologies and meeting old friends and former colleagues.
